First, a short introduction to infinimesh, an Internet of Things (IoT) platform that runs entirely on Kubernetes:

infinimesh enables seamless integration of the entire IoT ecosystem, independent of any cloud technology or provider. infinimesh manages millions of devices in a compliant, secure, scalable and cost-efficient way, without vendor lock-in.

Over the last few weeks we released several plugins, a task that had been on our roadmap for a while. Here is what we have so far:

Elastic

Connect infinimesh IoT seamlessly to Elastic.

Timeseries

Redis-timeseries with Grafana for time series analysis and rapid prototyping. It can be used in production when configured as a Redis cluster, and it is ready to be hosted on Redis-Cloud.

SAP Hana

All code to connect the infinimesh IoT Platform to any SAP Hana instance.

Snowflake

All code to connect the infinimesh IoT Platform to any Snowflake instance.

Cloud Connect

All code to connect the infinimesh IoT Platform to the public cloud providers AWS, GCP and Azure. This plugin lets customers use their own cloud infrastructure and extend infinimesh to other services, such as Scalytics, using their own cloud-native data pipelines and integration tools.

We chose Docker as the main technology because it lets users run plugins in their own space, in an environment they control. And since our plugins don't consume many resources, they fit into the AWS EC2 free tier, which is what I use in this blog post.

The plugin repository was structured with developer friendliness in mind. All code is written in Go, and configuration is done in the Docker files (for example CloudConnect/docker-compose.yml). Since you need to put credentials into them, we strongly advise running the containers in a controlled and secure environment.

Stream IoT data to S3

Here I'd like to show how easy it is to combine IoT with infrastructure already running in public clouds. The most common task we found is streaming data to S3: most of our customers use S3 either directly on AWS or through their own object storage that speaks the S3 protocol, such as MinIO, which is also Kubernetes native.

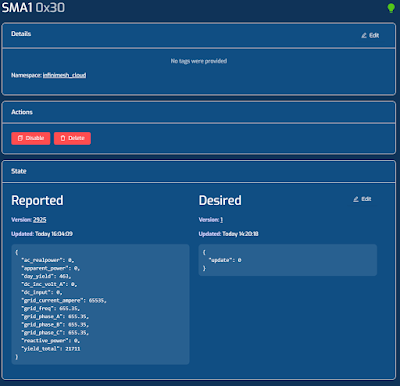

Of course, you need a private installation of infinimesh or, if you use the cloud versions of both, accounts on infinimesh.cloud and AWS. Here is a screenshot from the SMA device I used to write this post:

Preparation

- Spin up a Linux EC2 instance in the free tier; a t2.micro instance should cover most needs.

- Log in to the VM and install Docker as described in the AWS documentation: Docker basics for Amazon ECS - Amazon Elastic Container Service

- Install docker-compose and git:

sudo curl -L https://github.com/docker/compose/releases/latest/download/docker-compose-$(uname -s)-$(uname -m) -o /usr/local/bin/docker-compose && sudo chmod +x /usr/local/bin/docker-compose && sudo yum install git -y

That’s all the preparation we need. Now log out and log back in so that the docker group permissions set during the Docker installation take effect.

Setup and Run

- Clone the plugins repository and change into it:

git clone https://github.com/infinimesh/plugins.git && cd plugins

- Edit CloudConnect/docker-compose.yml and replace CHANGEME with your credentials.

- Build and start the connector (-d detaches from the console and lets the containers run in the background):

docker-compose -f CloudConnect/docker-compose.yml --project-directory . up --build -d

- Check the container logs:

docker logs plugins_csvwriter_1 -f
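Since every CHANGEME in the compose file has to be replaced with real credentials, a small pre-flight check before docker-compose up can catch one that was missed. The Go program below is a hypothetical helper, not part of the plugins repository: it scans CloudConnect/docker-compose.yml (or a path passed as the first argument) and reports the lines that still contain the placeholder.

```go
package main

import (
	"bufio"
	"fmt"
	"os"
	"strings"
)

// placeholder is the marker the compose file uses for values that must be
// replaced with real credentials before the containers are started.
const placeholder = "CHANGEME"

// findPlaceholders returns the 1-based line numbers in text that still
// contain the placeholder marker.
func findPlaceholders(text string) []int {
	var lines []int
	scanner := bufio.NewScanner(strings.NewReader(text))
	for n := 1; scanner.Scan(); n++ {
		if strings.Contains(scanner.Text(), placeholder) {
			lines = append(lines, n)
		}
	}
	return lines
}

func main() {
	// Default to the compose file edited in the setup steps.
	path := "CloudConnect/docker-compose.yml"
	if len(os.Args) > 1 {
		path = os.Args[1]
	}
	data, err := os.ReadFile(path)
	if err != nil {
		fmt.Fprintln(os.Stderr, "read:", err)
		os.Exit(1)
	}
	if lines := findPlaceholders(string(data)); len(lines) > 0 {
		fmt.Fprintf(os.Stderr, "%s still contains %s on lines %v\n", path, placeholder, lines)
		os.Exit(1)
	}
	fmt.Println(path, "has no placeholders left")
}
```

Run it from the cloned plugins directory, for example with go run checkcompose.go (the file name is arbitrary); it exits with status 1 while placeholders remain. This assumes a Go toolchain on the instance, which the preparation steps do not install.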

Some developer internals

We built some tooling around the plugins to make them as easy as possible for customers to use, and at the same time easy for developers to adapt.

First we iterate over /objects, find all endpoints marked with [device], call the API for each device, and store the data as a sliding window in a local Redis store to buffer network latency. After a few seconds we send the captured data as CSV to the configured endpoints. In our tests we transported data from up to 2 million IoT devices through this plugin, each device sending ten key:value pairs as JSON every 15 seconds.
